Machines Hacking People: Contact Center Fraud in the AI Era

With the rising tide of voicebots and AI-powered deepfake technology, identity security measures are being pushed to their limits – and only the most sophisticated defenses will help businesses survive the artificial intelligence era.

There is tremendous concern in the digital identity market about the use of AI tools to compromise security in industries like financial services, the public sector, healthcare, telecommunications, and many others.

In these sectors, awareness is also growing of how vulnerable contact centers are to AI-driven fraud technology such as deepfakes and voicebots.

AI-powered conversational systems (voicebots) built on large language models (LLMs) can be used to steal and fabricate customer identities, break into contact center systems to access PII (personally identifiable information) and other sensitive data, and cause privacy breaches that can sink even the most well-capitalized businesses.

With bad actors going the extra mile to victimize customers, security executives need to go even further to keep their organizations’ contact centers safe from deepfake fraud. Modern identity security strategies and market-leading digital identity vendors can help any business outpace today’s scammers and the increasingly sophisticated technologies they use.

Fraudsters in the AI era

The world in which contact centers operate is changing rapidly. Contact centers, which fraudsters often see as the “soft underbelly” of an organization, are under continual assault from hackers. Social engineering attacks using compromised personal data (e.g., SSN, date of birth, mortgage balance) are common – and increasingly successful.

To compromise a bank through its contact center, for example, a fraudster would generally have to take part in a phone call, answer security questions (using compromised data), and convince the contact center operator that they are a genuine user.

Cybercriminals can even commit identity fraud in contact centers through a company’s IVR (Interactive Voice Response) system, stealing assets or credentials without ever having to speak with a live operator.

What is different now, though, is the emergence of two AI-powered technical capabilities that make these attacks easier and far more scalable: deepfake voice generators, which can accurately mimic a human voice in real time, and conversational systems that can steer a conversation and respond intelligently using stolen data (think of systems, of which there are many, like OpenAI’s ChatGPT or Meta’s Llama 2). Combined into a voicebot, these capabilities can hold a human-like conversation with a contact center agent or an IVR.

Anatomy of a contact center deepfake attack

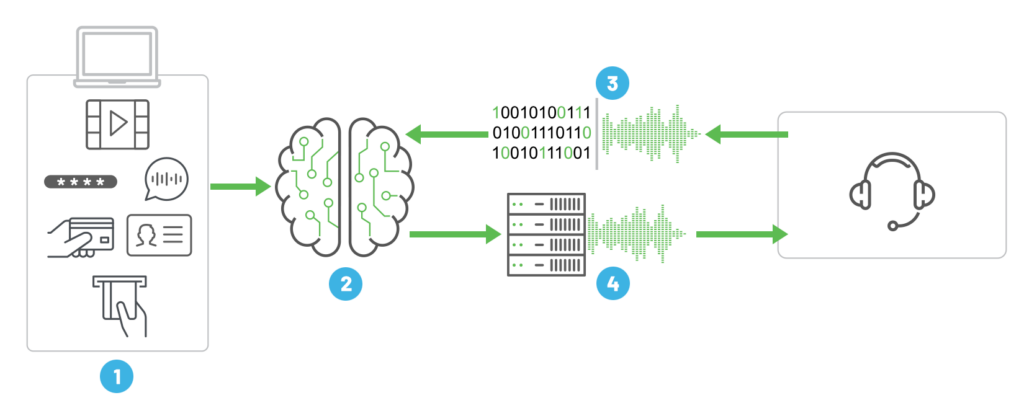

The diagram above shows the four core components of a sophisticated voicebot deepfake attack that can bypass many of today’s contact center security processes.

- Compromised data (1), which is readily available on the dark web, is fed into a generative AI “conversation orchestrator” (2).

- This orchestrator (2) acts as the fraudster’s “brain,” directing a real-time conversation with the contact center (depicted above as a headset).

- An automatic speech recognition (ASR) system (3) understands the agent’s questions, such as “What are the last 4 digits of your Social Security number?”, and passes that data to the AI orchestrator (2).

- The orchestrator processes each question and generates an accurate answer from the compromised data (1), which is spoken back to the contact center by a voicebot (4).

- The voicebot (4) contains a real-time deepfake voice generator that creates a voice indistinguishable from a live human voice.

Thanks to these technical advances, voicebot output not only sounds natural but can also strike an extremely convincing, conversational tone (similar to human speech) when responding to a contact center agent. With the help of the orchestrator (2), the system maintains context between questions and responds fluidly to natural language interactions.

As for the orchestrator, ChatGPT and Llama 2 are just two examples of the hundreds of generative AI systems that exist today. While hackers are unlikely to be able to manipulate these systems, other open-source systems can easily be downloaded and trained by fraudsters for cybercrime. Hackers are also rapidly developing a constant stream of new deepfake systems, and their proliferation will soon lead to a tsunami of fraud unless organizations put appropriate countermeasures in place to protect against deepfakes.

Deepfakes and presentation attacks

Deepfakes are a form of presentation attack: an attempt by bad actors to spoof an authentication system using fake likenesses of humans (e.g., masks, photos, videos, or voice recordings) to pass themselves off as genuine and commit identity fraud.

Technology like liveness detection can help secure businesses against presentation attacks, and a suspicious result can prompt step-up authentication to further protect the user’s identity. Liveness detection can greatly reduce fraud in CIAM/IAM systems and deliver significant cost savings.

As the AI fueling them becomes more accessible, deepfakes are going to become more commonplace. Once thought of as a threat reserved for celebrities and others in the public eye, deepfakes are getting easier to produce: voice generators are growing better at creating believable, manipulated content from less source material and with less time and cost.

With the development of technologies like large language models and deepfake generators, we are now entering an era in which hackers’ ability to use machines to mimic humans will become both exponentially more sophisticated and easier to scale.

In the past, we’ve had people hacking machines and machines hacking other machines – but, for the first time, we’re entering an era where machines can hack people.

Hidden signals and xDeTECH

To keep up with machines that steal identities through manipulated content, and with the scammers behind them who damage business reputations and customer trust, security professionals need to equip their organizations with innovative, market-leading technology capable of mounting a robust defense.

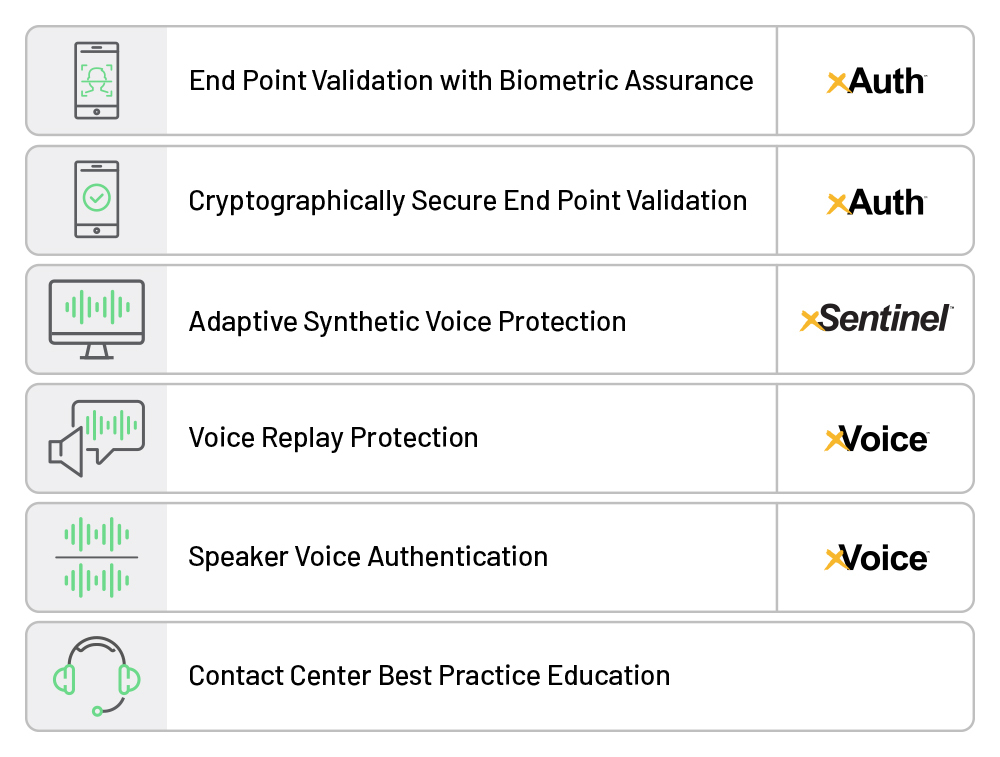

At Daon, we’ve developed defense capabilities to help our customers defeat these attacks, including voice biometrics, voice replay detection, and convenient MFA (multi-factor authentication) capabilities, like FIDO.

Our comprehensive approach, shown in the diagram above, outlines the solutions we provide today and introduces a new technology, xDeTECH™.

Recently previewed after several years of development, xDeTECH is an AI-powered product that identifies hidden artifacts in deepfake, voicebot, and other synthetically generated audio, which indicate that the audio likely did not come from a human. These artifacts (“signatures”) are present in fake audio signals but cannot be detected by the human ear.

In essence, xDeTECH is a real-time anti-virus/firewall for deepfake voices.

No matter how you process incoming voice communications, xDeTECH can be used as a first line of defense to flag potential fraud for further action, detecting synthetic voices within seconds of a call starting. It provides a “genuineness” score for each incoming call, giving organizations the data they need to take an appropriate course of action (e.g., step-up authentication or call termination). This helps balance security and user experience, minimize fraud, and optimize contact center operations.
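How a per-call score might drive the next step can be sketched in a few lines. This is a minimal, hypothetical illustration, not xDeTECH’s actual interface: the article does not specify the score’s range, thresholds, or integration API, so the 0.0-1.0 scale, the `CallPolicy` thresholds, and the `route_call` function are all assumptions. The optional `voice_auth_passed` argument reflects the point that scoring can run whether or not a contact center also uses voice authentication.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "continue"    # proceed with the normal call flow
    STEP_UP = "step_up"      # require an additional authentication factor
    TERMINATE = "terminate"  # end or divert the call for fraud review


@dataclass(frozen=True)
class CallPolicy:
    # Hypothetical thresholds on an ASSUMED 0.0-1.0 genuineness scale;
    # real values would be tuned to an organization's own risk appetite.
    terminate_below: float = 0.2
    step_up_below: float = 0.7


def route_call(
    genuineness: float,
    policy: CallPolicy = CallPolicy(),
    voice_auth_passed: Optional[bool] = None,
) -> Action:
    """Map a hypothetical per-call genuineness score to a next action.

    voice_auth_passed is None when the contact center does not use voice
    authentication; the genuineness score is then used on its own.
    """
    if not 0.0 <= genuineness <= 1.0:
        raise ValueError("genuineness must be in [0.0, 1.0]")
    if genuineness < policy.terminate_below:
        return Action.TERMINATE
    if genuineness < policy.step_up_below:
        return Action.STEP_UP
    # A likely-human voice that fails voice authentication is still stepped up.
    if voice_auth_passed is False:
        return Action.STEP_UP
    return Action.CONTINUE


if __name__ == "__main__":
    for score in (0.95, 0.5, 0.1):
        print(score, route_call(score).value)
```

The two thresholds make the security/user-experience trade-off explicit: raising `step_up_below` challenges more callers, while lowering it lets more calls proceed without friction.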

xDeTECH complements the existing products a contact center might be using, yet does not require a customer to have other Daon products installed. It also requires no enrollment, so it adds no friction for customers interacting with a contact center.

The xDeTECH system keeps pace with the changing fraud landscape through machine learning, continually improving its ability to detect speech from new synthetic speech systems. xDeTECH can be deployed for all calls in the contact center, whether or not a business uses voice authentication, and can sit alongside any other security and fraud management products an organization is using.

What’s next?

Long gone are the days when hackers typed away at clunky desktops in dark basements.

Although AI is often seen as synonymous with 21st-century society, the field was actually born in the 1950s. That common misconception speaks, perhaps, to a wider misunderstanding of the technology. AI has only gotten smarter, stronger, and more difficult to detect over the many years humans have been feeding it information, fine-tuning its systems, and expanding its range of uses. The reality is that AI is marked by exponential growth: around 30 years passed between its inception and its first large leap (expert systems), but major leaps now arrive over months, not decades.

In short: AI isn’t going away, and it will only get smarter, more scalable, and more accessible to wider groups of people.

The proliferation of contact center fraud is an undeniable effect of AI’s recent advances. Fraudulent LLM voicebots and deepfakes (the former now being sold online to thousands of people) have altered the way we do business and the way we exist in digital spaces. Yet when the same underlying technologies are used for good, they can help bridge gaps in financial inclusion and accessibility, provide better access to maternal healthcare and improve health outcomes for mothers in low-resource regions, and help creators work faster and across more mediums.

As fraudsters continue to exploit AI with malicious intent, it’s up to those who see the technology’s potential for positive change to defend our businesses, customers, and data.

Learn more about protecting your business from AI attacks with xDeTECH today.